Summary:

AI interviews offer faster feedback at scale, but they’re not a replacement for in-depth, human-led semistructured interviews.

In the last year, unmoderated interviews became possible for the first time: AI can now facilitate an interview for you. But how well do these AI-moderated interviews really work?

We conducted a study with 10 participants and 2 AI interviewers, Marvin and UserFlix, to find out.

TL;DR

- AI-moderated interviews can help you collect structured input at scale. They’re best when you already know what to ask — like product feedback, recruitment screening, or multilingual interviews without translators.

- They follow the script, not the insight. They may probe when an answer is short or unclear (or when you’ve told them to), but they don’t chase unexpected insights, and they don’t skip or reframe weak or irrelevant questions.

- The experience can feel “almost conversational,” but still unnatural. Summaries help participants feel heard, yet interruptions, long pauses, repetitive questions, and poor time management are common.

- Use them to supplement, not to replace, human moderation. For messy problem spaces, high-stakes decisions, or studies that require deep domain knowledge and real-time judgment, human interviewers still outperform AI interviewers. These tools are early and they’ll improve, but they’re not yet suited for semistructured, in-depth discovery interviews.

How AI-Moderated Interviews Work

An AI-moderated interview is a live interview with a participant facilitated by an AI system with a synthetic voice.

Currently, most AI interviewers on the market are voice-only. In the future, they may incorporate a human-like avatar.

Like in unmoderated usability testing, participants can complete their interview at a time of their choosing, without a human facilitator. This scheduling flexibility makes it convenient for both participants and researchers.

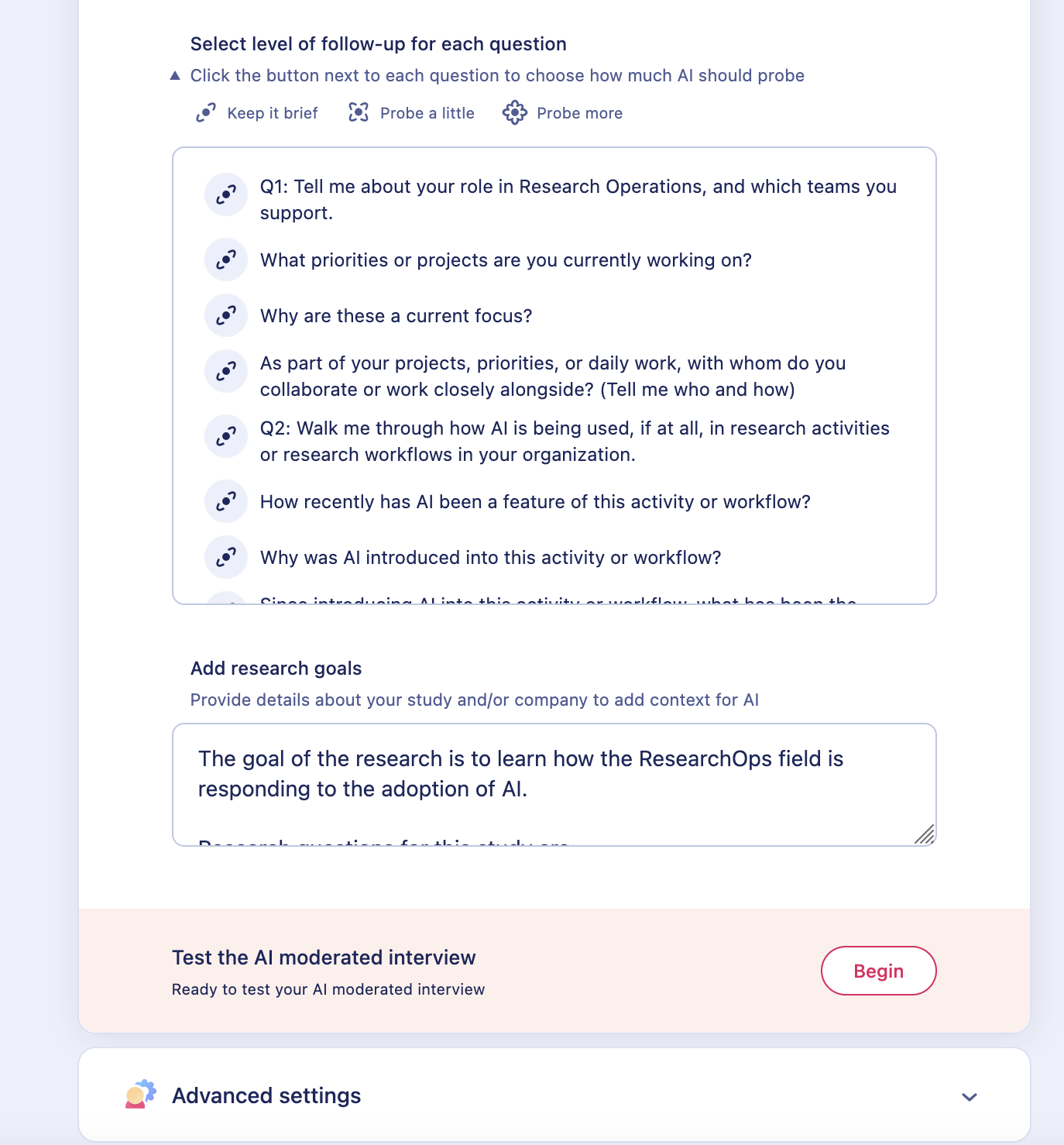

To set up an AI interview, you must share your research goals or an interview guide with the tool. UserFlix has an AI assistant that helps you craft an interview guide, and Marvin allows you to specify the level of probing for each question.

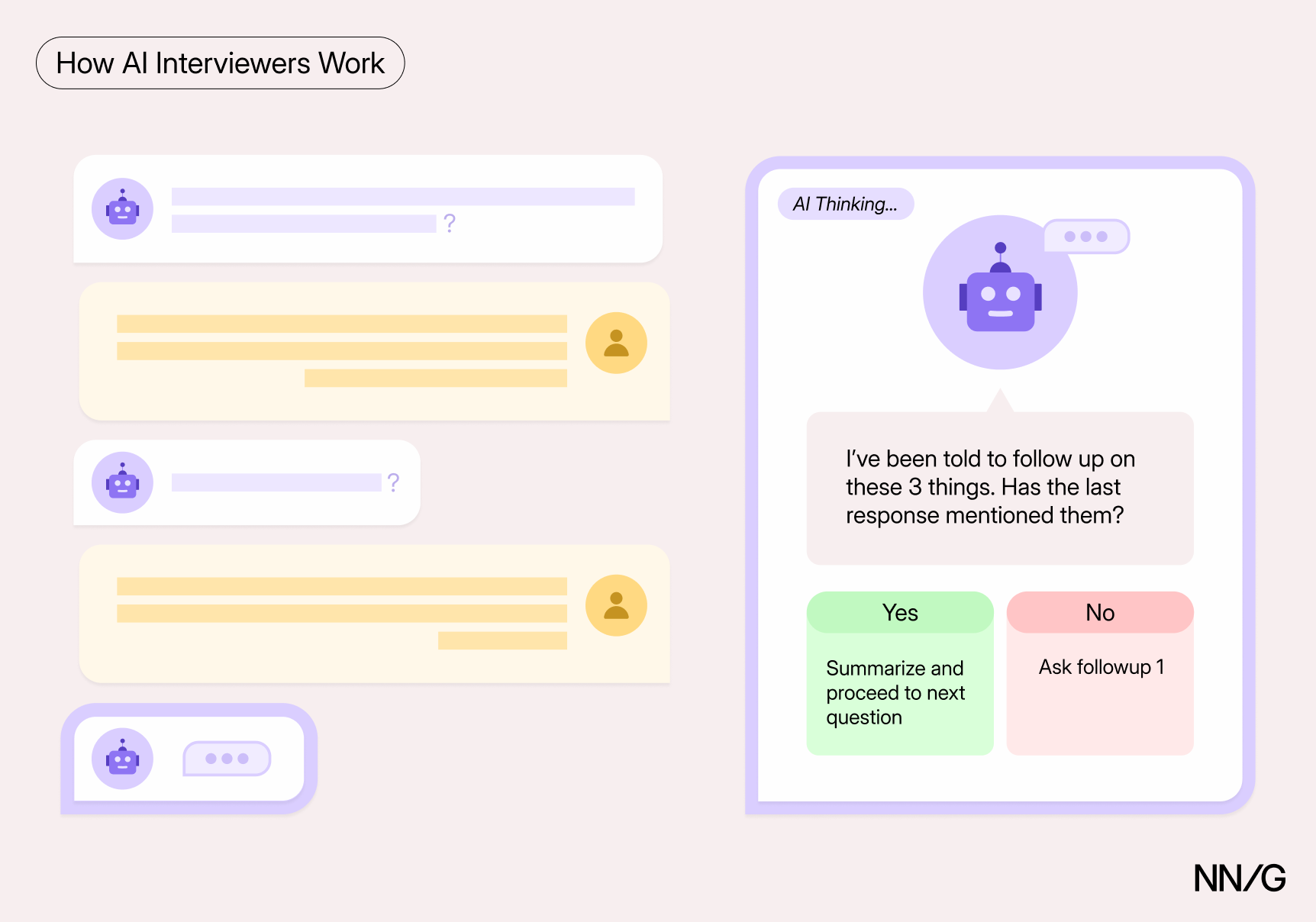

The AI interviewer asks the questions in your guide and “listens” to the response. The system can follow up on the last response, if needed, based on your guide or research goals. For example, you might instruct an AI interviewer to request more detail if a response to an important question is very short.

Can AI Interviewers Build Rapport?

Rapport is built when participants feel understood and accepted by the interviewer, which allows for free-flowing, honest conversation. To build rapport, the facilitator needs to listen carefully to what the participant says, watch their nonverbal cues (such as body language and facial expressions), and appropriately communicate to the participant that they are being heard and understood.

AI interviewers don’t have faces, and they can’t read facial expressions. As any good interviewer knows, we gather and communicate a great deal of information not just from what is said, but from how it’s said and from nonverbal cues (like facial expressions).

As one participant in our study put it:

“If I look … [at] you like this [pulling a displeased face] you’re going to notice if something’s wrong or if I’m trying to tell you something or expressing something.”

Naturally, we were curious to see how well a synthetic voice can build rapport with real participants.

Participants Felt Heard

Despite the lack of nonverbal feedback from the interviewer (like nodding, smiling, or eye contact), some participants felt they had built rapport with the AI interviewer.

“I remember this was one of the [survey] questions. Do you feel understood? (…) yes, I did. Definitely. This was the most outstanding (…) idea I had about the overall experience.”

“It did a good job of (…) mirroring back to me what I had said so that I felt (…) heard and understood (…) that felt reflective of what a real conversation feels like with another human.”

The AI’s ability to condense and summarize participants’ thoughts was the biggest contributor to this feeling and the most common positive feedback about the experience. AI interviewers made participants feel heard, and some participants were impressed with how well and concisely the AI interviewers summarized their thoughts.

Here are some examples of the AI interviewer’s summarization:

UserFlix: “Thank you for that clear rundown. It sounds like you’re deeply embedded with your product managers, UX designers, content designers, and engineers, ensuring that everyone stays aligned and informed. Now I’d love to shift gears a bit…”

Marvin: “Yeah, I get your point. That kind of deep in-depth psychological nuance reading, body language, adapting in the moment, AI’s not quite there. It’s clear that human expertise is still crucial for that level of research. Thanks for sharing that example…”

Summarization is an important facilitation technique. However, when human interviewers use summarization, they paraphrase and then check in with participants to ensure that their understanding is correct. Unfortunately, neither AI interviewer gave participants a chance to weigh in; they summarized and moved swiftly on to the next question.

One participant repeatedly interrupted the AI interviewer by accident while trying to confirm its summaries, which made the conversation stilted and awkward. She explained:

“I wasn’t sure whether the AI was looking for confirmation (….) [it] changed the topic right away. (…) I would have expected (…) a little bit of a pause to, you know, kind of allow me to say ‘Yeah, (…) that’s how I meant it’.”

At times, the AI interviewer even expressed empathy toward participants. For example, UserFlix reassured a participant who apologized for the length of her answers.

Participant: “Oh my gosh, I feel like my answers are really long, I’m sorry.”

UserFlix: “You’re doing great, and I appreciate all the detail. This richness helps paint a full picture of how AI is impacting your work. Let’s move to the final question…”

However, even with the same script, the performance of both AI interviewers varied widely across interviews. Some interviews featured lengthy summarizations and overly enthusiastic responses; others felt cold and brief, and lacked probing. This inconsistency may have been caused by product updates made during the study period, which spanned several weeks.

As a result, interview length varied dramatically, ranging from 13 to 56 minutes; neither platform allowed us to set a target interview duration. Experienced interviewers understand the importance of balancing in-depth inquiry with consideration for participants’ time. While some participants are more verbose than others, skilled interviewers can adapt the number of questions to stay within the scheduled session.

However, the AI interviewers were unable to make such adjustments.

They were unable to “read the room.” They struggled to recognize when a question had been sufficiently explored and when it was time to move on.

Participants Still Craved Nonverbal Feedback

Despite feeling heard by the AI interviewer, participants still craved something to look at. Without nonverbal backchannel cues — the small facial reactions and gestures that signal “I’m listening” — the conversation felt a bit unnatural.

“I think I was expecting to see some kind of human form or human face (…) or something in the middle (…) rather than being such a frigid (…) experience only with that circle icon.”

“It’s because there wasn’t any human expression associated to it (….) I couldn’t see anything reacting.”

“There’s nothing really to look at except, like, this glowing orb. I don’t know how to respond to that.”

The lack of lip-movement cues also made it hard to comprehend the question, especially when it followed a long summarization.

“I’m not seeing (…) someone talking to me or seeing their mouth move so I can, like, fully comprehend what it’s saying.”

However, some participants were not sure they’d be comfortable with an AI avatar. One participant who had never experienced an AI interviewer worried that she wouldn’t be able to tell whether she was speaking to a human:

“I was afraid that it’s going to be so realistic (…) that maybe I won’t be even able to notice whether it’s an AI or a real human.”

Her existing concern about deepfakes made it particularly important for her to know whether the interviewer was a real person.

“My whole world is going to get really messy if I cannot tell the difference between what’s real and what’s artificial. That’s a fear of my real life as well.”

Participants Had Privacy Concerns

Just as with a human interviewer, participants needed time to become comfortable with the AI interviewer before they were willing to share information. Unfortunately, because the AI interviews started somewhat abruptly, without the usual introduction explaining how recordings would be used or how long the interview would last, participants did not have enough time to get used to the interviewer or to trust it. (This information had been included in a consent form that participants saw before the interview.)

As a result, a couple of participants admitted that they held back information.

“It asked if I can share a little bit more about myself and my current role. And I was like, oh, okay, I’m not going to mention where I work, but (…) if you would ask me, I would have told you (…) if you go to LinkedIn, you will see it. So, it’s no secret. I don’t know why, but I kind of felt that I’m not comfortable sharing that information with Marvin.”

The participant felt that, with a human interviewer, there’s some accountability for how those insights are used.

“[I have] this feeling that my information is going to be handled well because it’s going to be a person working with all these insights (….) But with AI, since it’s artificial, I don’t [know] how it will use the information.”

AI interviewers can boost confidence and trust by introducing research sessions properly. Researchers should be able to give AI interviewers a verbatim script or a series of important talking points to introduce the session.

A participant volunteered what she would like to hear the AI interviewer say at the beginning of the interview to make her feel better:

“I am an AI [interviewer] (….) I’m representing Maria (…) Your information is totally confidential…”

The Conversation Is Awkward

Although most participants felt heard and understood, and were pleasantly surprised by the experience, many still found the conversation awkward and unnatural at times.

Only 3 (out of 10) participants agreed with the statement “The conversation felt natural” in the post-interview questionnaire. And only 5 participants agreed with the statement “I felt comfortable during the interview.”

Across the two AI interviewers, participants were interrupted, experienced lengthy pauses after answering a question, and were asked repetitive questions.

The two AI interviewers had different problems when it came to responding to participants. UserFlix often interrupted participants, while Marvin had lengthy pauses that made the conversation feel stilted. UserFlix was too eager to ask the next question, so some participants felt they had to rush to express their thoughts or were cut off before they had finished; only some participants were bold enough to interrupt the AI to finish their thoughts. Marvin, on the other hand, occasionally failed to ask the next question at all, prompting participants to request it! One participant even began multitasking when Marvin fell silent.

“Sometimes also I wanted to make a pause because I was not ready for some of the questions, so I needed to think back and I was afraid to make too long of a silence (….) And sometimes it moved on before I wanted it to.”

Because AI interviewers can’t read facial expressions, they can’t tell whether a participant is still thinking or would like to say more. Some participants expected that webcam use would allow for facial-expression analysis — something current AI interviewers cannot do. Until that becomes possible, one participant suggested a button that participants could click to indicate when they were ready for the next question.

Sycophancy Is Common

AI interviewers are not immune to sycophancy. Human interviewers will thank participants for their contributions and sometimes make remarks like “That’s interesting.” AI interviewers used these techniques too, but indiscriminately.

Several participants commented on the AI interviewer’s overly enthusiastic responses, which made it seem fake and disingenuous.

“It’s like: ‘Wow, brilliant, great idea. Wow, what an insight’ (…) No one acts like this. If we see a person act like this we say okay, sociopath (…) It’s too much, too reactive, too thankful.”

“I gave a couple answers that I would consider (…) mundane, and it was (…) very enthusiastic about telling me how interesting they were.”

“I did feel like it was doing that AI thing where it was like, ‘Rah, rah, you’re such a great participant,’ and I was (…) rolling my eyes a little bit because it would say things like (…) ‘Fascinating. That’s so interesting. Wow!’ (…) It felt a little bit too positive because I wasn’t saying anything groundbreaking.”

Can AI Interviewers Replace Human Interviewers?

It’s useful to separate this question into two related questions:

- Can AI replace some human-led interviews?

- Can AI interviewers eliminate the need for human interviewing altogether?

The short answer to the first question is that AI interviewers can replace humans in some cases: specifically, in structured interviews.

Structured interviews follow a predefined guide, ask each question largely as written, and introduce probing followup questions when responses appear incomplete. The AI interviewers we evaluated in this study (and the ones currently on the market) run structured interviews.

Currently, AI interviewers cannot meaningfully adapt the interview: they do not create new questions, reorder or skip questions, or adapt questions that seem irrelevant. This led some participants to experience the questions as repetitive or redundant. It also left some participants feeling that they had little control over the interview’s trajectory.

One participant contrasted this experience with a human-moderated interview:

“When you are a participant [in a human-moderated interview], (…) you do have some level of control over the conversation. And with a machine, I feel like I lose it.”

Such reactions reflect a broader issue: structured AI interviews give participants little ability to shape the conversation as it unfolds.

Structured interviews are well suited for collecting standardized data from large numbers of participants, especially when the problem space is well understood — and in these cases, AI interviewers can plausibly replace human moderation.

However, semistructured interviews — often used in discovery — are more useful when studying unfamiliar problem spaces.

A semistructured interview allows the interviewer to adapt to new and interesting information that is relevant to the research questions. Running a successful semistructured interview requires continuous tradeoffs: Should you probe further or move on, given the remaining time? Should you follow the guide or pursue an unexpected but promising insight? Making these judgments depends on domain knowledge, research goals, and situational judgment.

When asked whether she’d consider using an AI interviewer in her research, one participant asked:

“Would it go off script and dig deeper to … [get] a deeper, richer understanding of one question or one problem space? Would it do that? Could it do that?”

The short answer to this question is no. Current AI interviewers are not yet capable of conducting semistructured interviews, and it may be some time before they can do so at a level comparable to a trained professional.

One participant, a research leader, reflected on whether the AI interviewer could be used for his team’s projects:

“I’m pretty skeptical [about the use of AI interviewers] — just because the type of research that my team does requires so much subject matter expertise, and we need to be able to [ask] like very, very targeted and specific questions [which require] (…) a lot of background knowledge about the client or user.”

You might wonder whether providing more context or autonomy to the AI could solve this problem. In practice, this is difficult. Researchers cannot anticipate in advance which topics participants will surface or which domain knowledge will be relevant. As a result, it’s nearly impossible to predefine all the guidance an AI interviewer would need.

“I think [it might be better for] things that are very procedural (…) But for generative research, where people can go off the rails, (…) if you don’t get your AI set up right, it’s not going to get the nuance (…) It’s probably not appropriate for that.”

Moreover, the constraints of structured interviewing are what make AI interviewers viable in the first place. Large language models tend to perform best when tasks are tightly bounded and success is clearly defined (for example, “If the user mentions X, then ask Y”). Increasing an AI interviewer’s autonomy would likely reduce performance.

Until AI interviewers can consistently make the same nuanced tradeoffs that professional interviewers do, structured interviews will likely remain the practical ceiling for most AI interviewer products.

Use Cases for AI-Moderated Interviews

Based on this study and our assessments of these tools, we highlight four use cases where AI interviewers can be useful.

Product-Related Feedback

Product-feedback interviews are typically highly structured, with predefined questions and limited need for exploratory followup, making them a good fit for AI moderation. This is especially true for post-launch or beta feedback, when teams need quick input on what’s working, what’s confusing, and what needs fixing.

Short AI-moderated interviews could be a strong alternative to surveys for gathering structured product or feature feedback. Instead of sending an online questionnaire, teams could collect detailed user input through AI-moderated interviews.

An Option for Teams with No Researchers

AI moderation could help teams without dedicated researchers talk to their users without extensive interview training. Researchers may still need to help create a solid interview guide, but that’s far less work than recruiting, scheduling, and conducting interviews themselves.

Teams that currently do no user research at all or rely only on occasional surveys can benefit from AI-moderated interviews as a practical stepping stone. They won’t match the depth of an expert-led interview, but they can still surface clearer explanations and examples than surveys alone.

Translated Interviews

AI interviewers let teams run interviews in languages they don’t speak — without hiring a bilingual researcher or translator. For many organizations, this solves a long-standing obstacle to studying and designing for their full customer base.

With AI transcription, the interview transcript can be translated into English (or your language of choice) for analysis.

In one AI-moderated interview, an Argentinian participant asked Marvin to switch to Spanish, and the entire interview was conducted in Spanish.

Marvin: “Let’s start off with this. Could you tell me about your role in research operations and which teams you support?”

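Participant: “OK. ¿Puedes hablar en español?” [OK. Can you speak Spanish?]

Marvin: “Claro, sí, puedo hablar en español sin problema. Entonces vamos a continuar en español. Como te mencioné, esta conversación se está grabando.” [Of course, yes, I can speak Spanish without any problem. So let’s continue in Spanish. As I mentioned, this conversation is being recorded.]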

The participant said that Marvin’s Spanish was quite good. While it occasionally switched between Argentinian and Mexican Spanish, the participant was able to understand it at all times.

Recruitment Screening

Recruitment-screening interviews are, by design, structured and evaluative, which makes them especially well-suited for AI moderation.

One participant said that AI interviewers could be extremely useful at the screening stage, where finding the right participants is essential. A short AI-moderated interview could quickly determine eligibility and save recruiters valuable time that would otherwise be spent calling candidates.

When to NOT Use an AI Interviewer

AI-moderated interviews are a poor fit when you need to interpret nuance, draw on deep context, or adapt heavily in the moment. Avoid using an AI interviewer in the following situations:

- When the topic requires deep domain knowledge. If your participants use specialized terminology, acronyms, or industry jargon, an AI interviewer may miss important details or fail to recognize when a response deserves followup. (While you could give the AI interviewer some context, it will be hard to include all the necessary context.)

- When you’re exploring a problem space you don’t understand yet. Early discovery interviews often require the interviewer to notice unexpected but relevant threads and reshape the line of questioning as they learn. If you can’t anticipate what to probe for ahead of time, it’s difficult to configure an AI interviewer to do the right thing.

- When you expect each interview to take a different path. Some studies involve participants with widely different roles, workflows, or contexts — where the best followup questions depend heavily on what each person reveals. AI interviewers apply the same structure across sessions, which can result in repetitive or irrelevant questions and missed opportunities to go deeper.

- When the research might involve showing, not just telling. If you need participants to share their screen and walk you through a workflow or demonstrate how they perform a task, an AI interviewer won’t be able to observe behavior and ask relevant questions in response to what participants are doing.

About Our Study

We recruited 10 participants, sampled from 8 countries across North and South America, Europe, and Asia. Our study examined how teams incorporate AI into research and operations. Participants were research leaders and ResearchOps professionals — more familiar with AI than the general population and more experienced with interview moderation.

While this sampling choice means that some of our findings may not fully generalize to the general population, it also allowed us to evaluate AI interviewers through the lens of experienced moderators and have them contrast this experience with interviews they’d observed or conducted in the past.

We used two AI-interviewer tools for this study, Marvin and UserFlix, and gave both the same interview guide. While the interface for each AI moderator was slightly different, the interview experience was very similar.

Participants were assigned to one of the tools. None had previously used these AI interviewers; a few had experienced a demo version, but no one had participated in or run an AI-moderated research study. Two participants had heard of AI interviewers being piloted in their organization.

Conclusion

AI-moderated interviews are best used as a complement to human moderation, not a replacement for it. Today, they work well for collecting structured feedback at scale — such as post-launch product feedback, recruitment screening, or interviews that require consistent questioning across participants.

However, AI interviewers still struggle with the skills that make human-led discovery interviews effective: adapting in real time, making nuanced tradeoffs, and picking up on subtle cues. As a result, they are not yet suitable for semistructured or in-depth interviews, where following unexpected insights is critical.

These tools are still new and will continue to improve. Over time, participants may grow more comfortable sharing information with them. Even so, replacing human interviewers would require AI systems to consistently exercise the same judgment, domain knowledge, and situational awareness that trained researchers bring to interviews — abilities that remain out of reach for now.

We thank Marvin and UserFlix for allowing us to use their products for this study. This study was not sponsored or compensated by either company.